Gurbaksh Singh: Decoding the code behind The Unfiltered History Tour at the British Museum

VICE Media’s The Unfiltered History Tour powerfully narrates the stories behind the British Museum’s most disputed artefacts through immersive Instagram filters. Here, Gurbaksh Singh (pictured bottom), Chief Innovation Officer, dentsu international, India, breaks down how technology and creativity intersect in the project.

Growing up in India, we have all heard from our grandparents about the British Empire and how some of India’s most valuable possessions were taken to Britain over time. But India wasn’t alone: the British Empire left many countries and communities traumatised during its reign.

Knowing all of this, our intentions for this project were never lukewarm. We were determined to highlight the other side of history, narrated by the silenced voices of communities once under the control of the British Empire. To do justice to our intentions, and to all the communities we represent, we needed to perfect every aspect of the project with great sensitivity and accuracy.

The inception:

In the initial stages of this campaign, our intention was set in stone. But we weren’t sure how to approach the execution, or even which platform would best suit our intended outcome.

Over time, we kept discussing what we imagined the final execution would look like and the kind of impact it could have. That’s when a thought occurred: “What if we could teleport the artefacts back to their homeland?” That became our Eureka moment.

With little idea of our final execution and approach, we decided to explore every possibility, spending considerable time and resources researching the latest technology we could incorporate. That is where our entire research and development budget went.

Why Instagram?

If we were clear on one thing, it was that this idea needed to build experiences at scale. It had to live on a social-first platform to create the impact we had set our minds on.

Fun fact: “Instagram was not the initial execution route, and even the first filter had no audio!”

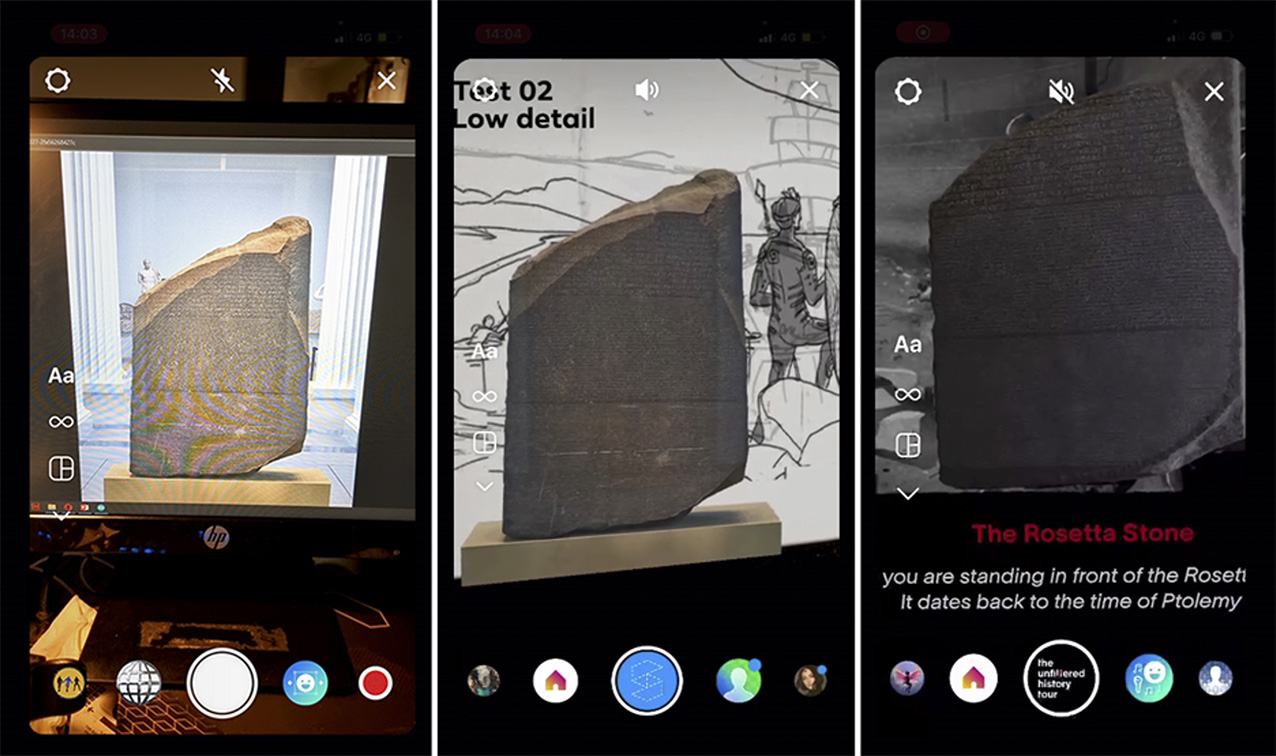

Finally, we settled on Instagram as our platform, leveraging its Augmented Reality filters to create an immersive audio-visual experience.

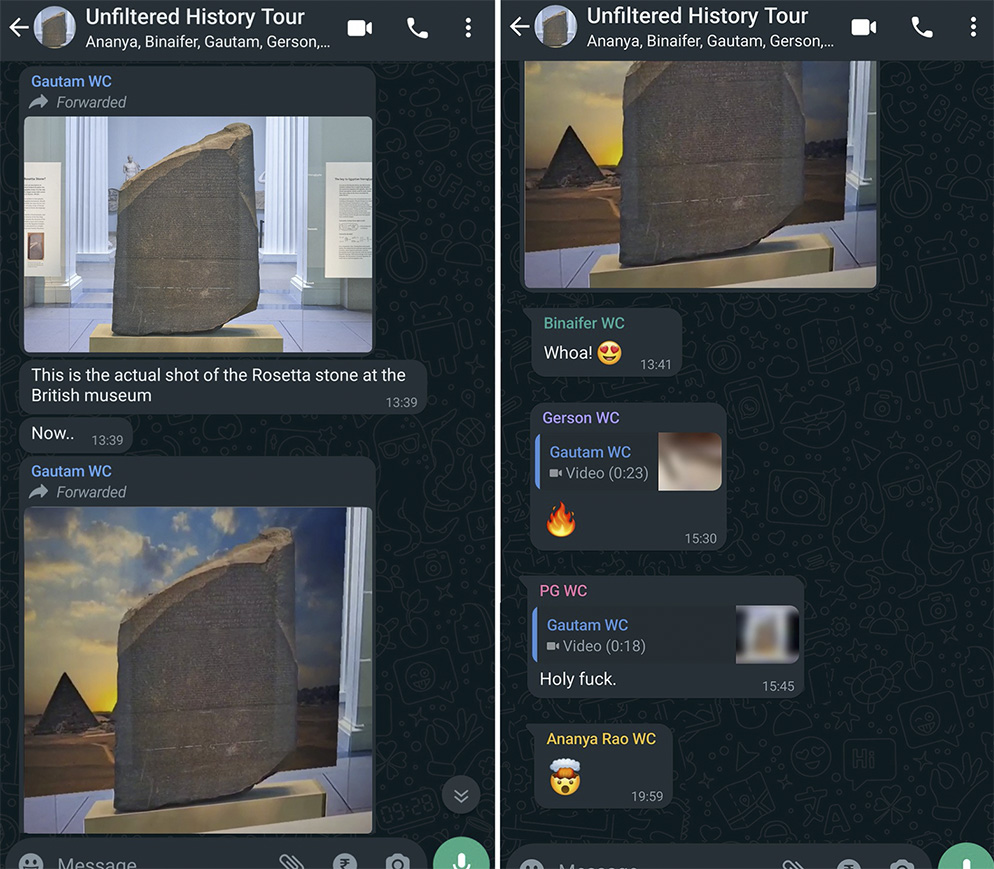

We then made a 2D Instagram filter prototype and presented it to the larger team. Their reactions made it clear we were on the right path, and a series of revelations about our next steps followed.

Team reactions when they saw the first demo filter in action.

Buoyed by the larger team’s enthusiasm, we quickly started building our final execution roadmap, and that’s when we realised we had a problem. Instagram filters are typically not designed to scan life-size 3D objects, yet in our case the filter had to scan artefacts 10 feet tall.

This pushed us to explore the limits of Instagram’s AR filters and make the most of the limited resources the platform offers. The result was deconstructing Instagram’s face filter technology and repurposing it for an entirely new use case, for the very first time.

The lockdown makeshift:

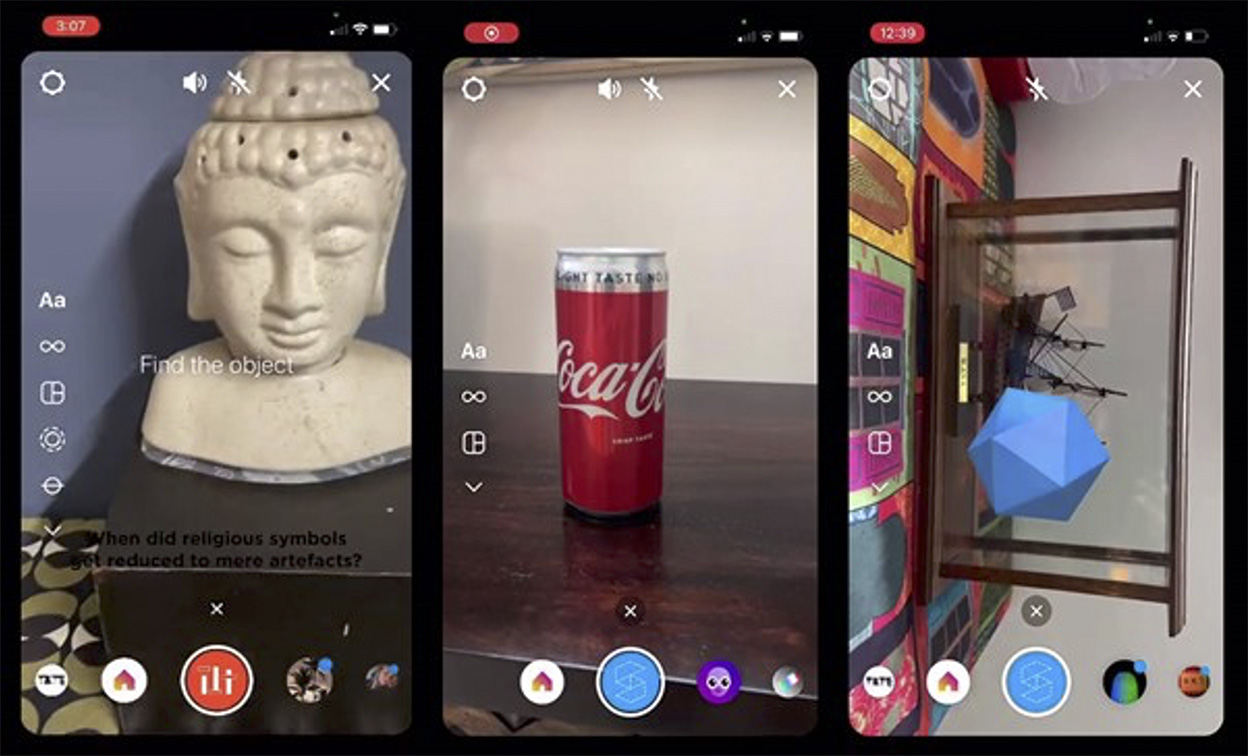

The initial stages of tech refinement happened during lockdown, which forced us to work with limited resources.

From teleporting soda cans to household ornaments, we tried everything during lockdown to simulate the British Museum experience at home while testing early-stage prototypes.

Check out this early-stage filter in action.

The limitations:

We began working with our local team in London, remotely gathering data on the measurements, placement, lighting and mapping of our selected artefacts.

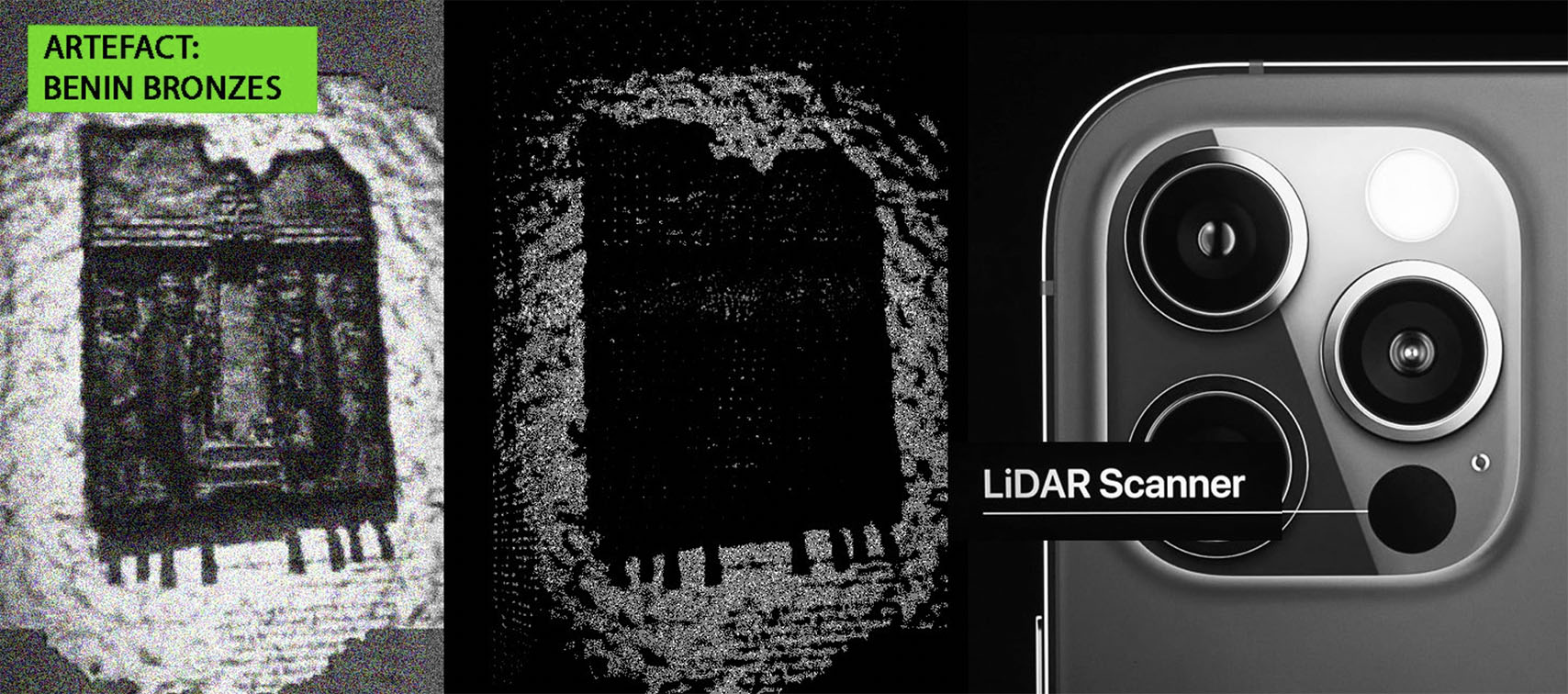

We zeroed in on a technology called ‘LiDAR’ (Light Detection and Ranging), which was initially developed for military use. We planned to use it to take accurate measurements and mapping data for the artefacts, but we also needed to keep the entire operation undercover. Since we couldn’t hide big commercial scanners, we found a civilian alternative: the LiDAR scanner built into iPhone 12 Pro models.

Every artefact had its own history and challenges:

We hit a major hurdle with the Gweagal Shield. The artefact sat in a very tricky location inside a glass enclosure at the British Museum, and daylight from two large casement windows changed its lighting and reflections throughout the day. The situation was entirely out of our control.

This pushed us harder, and we had to unlearn our usual Spark AR workflow.

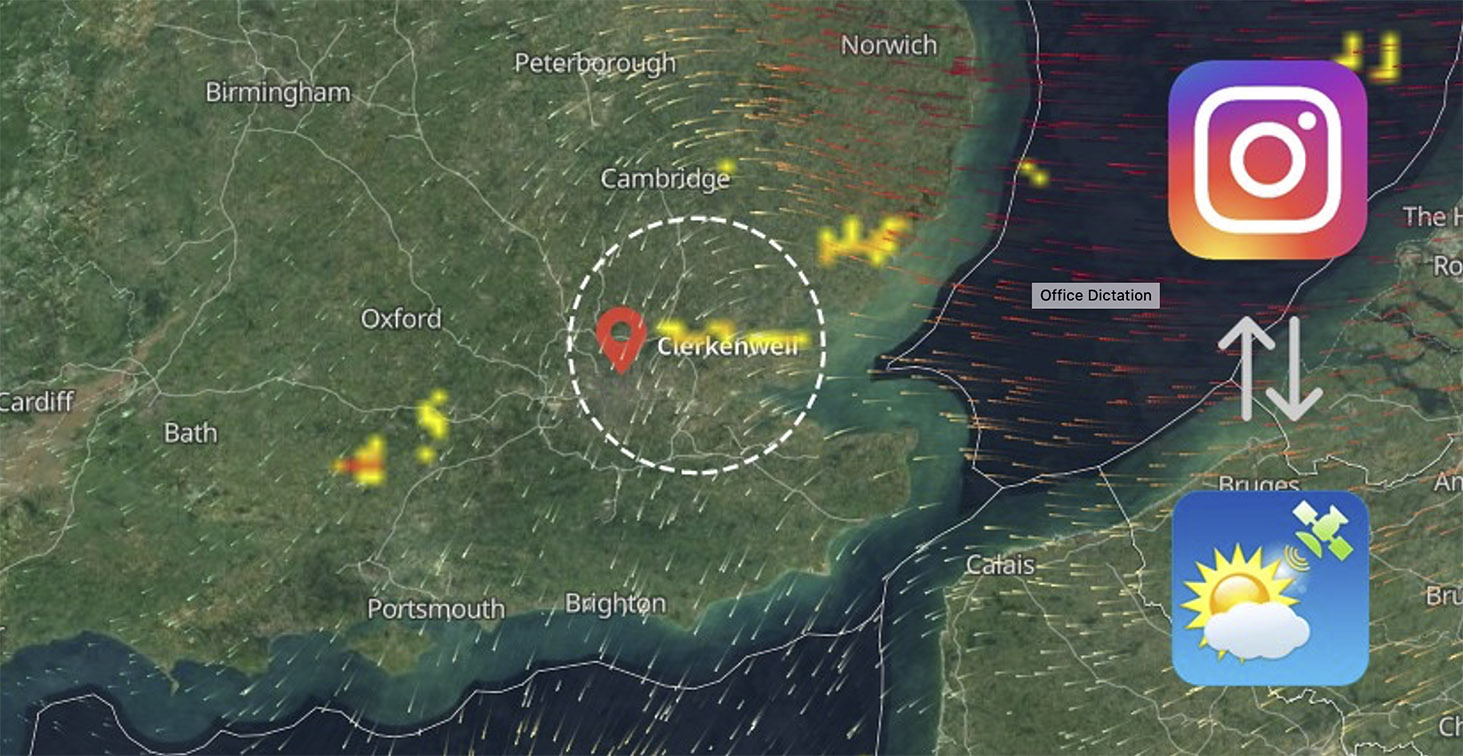

We attempted to develop an Instagram filter that used real-time weather data to adapt dynamically to the changing environment at the museum. We mapped satellite data so that we could fetch live weather updates for just a 3 km radius around the British Museum instead of the whole of London.
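The radius-limited weather lookup can be sketched roughly as below. This is a minimal illustration under stated assumptions, not the team’s actual implementation: the museum coordinates are approximate, and the `Reading` type, cloud-cover thresholds and lighting preset names are all hypothetical. It uses the haversine formula to keep only satellite grid readings within 3 km of the museum, then averages their cloud cover to pick a lighting preset.

```python
import math
from dataclasses import dataclass

# Approximate coordinates of the British Museum (assumption for illustration).
MUSEUM_LAT = 51.5194
MUSEUM_LON = -0.1270
RADIUS_KM = 3.0
EARTH_RADIUS_KM = 6371.0


@dataclass
class Reading:
    """Hypothetical weather sample from one satellite grid cell."""
    lat: float
    lon: float
    cloud_cover: float  # fraction of sky covered, 0.0 to 1.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby(readings, radius_km=RADIUS_KM):
    """Keep only readings within radius_km of the museum."""
    return [r for r in readings
            if haversine_km(MUSEUM_LAT, MUSEUM_LON, r.lat, r.lon) <= radius_km]


def lighting_preset(readings):
    """Pick a hypothetical lighting preset from mean local cloud cover."""
    local = nearby(readings)
    if not local:
        return "default"  # no local data: fall back to a neutral preset
    mean_cover = sum(r.cloud_cover for r in local) / len(local)
    if mean_cover < 0.3:
        return "sunny"
    if mean_cover < 0.7:
        return "partly_cloudy"
    return "overcast"


readings = [
    Reading(51.5200, -0.1260, 0.9),  # roughly 100 m from the museum
    Reading(51.5150, -0.1300, 0.8),  # roughly 0.5 km away
    Reading(51.4700, -0.4543, 0.0),  # near Heathrow, ~23 km away: excluded
]
print(lighting_preset(readings))  # overcast
```

Filtering by distance before averaging means a clear sky elsewhere in London cannot skew the preset chosen for the galleries, which is the point of narrowing the lookup to 3 km.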

Unexpected encounter:

The British Museum closed during the second wave of COVID-19. When it reopened, we lined up the team for on-ground filter testing, and what we saw left us in deep shock.

After reopening, the British Museum was, from a tech perspective, a completely different place.

We had mapped and built the entire tech around the British Museum’s floor plan, on the assumption that it would stay constant.

To our surprise, everything had changed. Many artefacts had been moved from their original places, a few exhibits were temporarily closed, and, to manage ventilation, some windows were kept open while others were closed. This severely affected the lighting and created new reflections around the artefacts.

This made the filter’s scanning even harder to get working. It’s no exaggeration to say it drove us crazy.

It wasn’t long before we started having nightmares about failing the project’s ambitions and all the work that had gone into it. After so many months, the pressure was at an all-time high. However, we kept our eyes on the goal and kept going.

Un-hurdle:

After more than 500 days on this project, we lost count of the prototypes we scrapped (a rough count would put it between 180 and 200 before we locked the final route).

Developing Instagram filters from raw data alone, without being physically present, was a real challenge. As if that weren’t enough, having the filter tested on-site by a non-technical person was another massive hurdle.

Giving instructions line by line took a lot of time and effort. It was almost like manoeuvring a rover on Mars! But despite several hindrances, we were all motivated to bring this project to life. Working closely with teams and contributors around the globe only reinforced our belief in the idea itself. We knew this story needed to be shared and heard.

Using technology to tell a story that sets out to touch the lives of millions of people like us, and that has the power to bring about change in the world, is an overwhelming yet satisfying experience.
